Scanner

2011

I developed a 360-degree 3D scanner as a student, as part of my BFA in Sculpture. Though it was an eccentric capstone for a sculpture degree at the time, I now look back on this project as an early exploration of, and play with, concepts that are core to my work: digital twins, computer perception, and the relationship between the solid world and a softer one.

In 2011, digitally scanning objects and environments was challenging. The tools and pipelines that now make it routine didn't exist yet. Wanting a quick way to scan interior environments, I developed a 360-degree laser scanner, built it for under $30 with Arduino and Processing, and published it as open source. The scanner produced reconstructions of rooms that were not especially accurate but were recognizable. For the next year and a half it became an image-making tool for me, as I explored, as an artist, the role of imagination in how we perceive spaces.

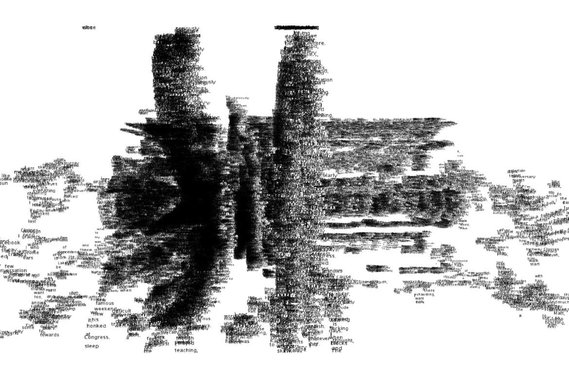

Image made by plotting words from a text file in 3D space according to the spatial data acquired by the scanner.
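The image above pairs words with points from a scan. One possible way to lay this out is sketched below in Python. It is not the original software, and it assumes the scan is a list of (x, y, z) points in metres with a virtual camera at the origin looking along +z, and that words are assigned to points in reading order, cycling back to the start when the text runs out. The function names and the virtual-camera parameters (`focal`, `cx`, `cy`, `base_size`) are hypothetical.

```python
from itertools import cycle


def project(point, focal=500.0, cx=400.0, cy=300.0):
    """Perspective-project an (x, y, z) point onto a virtual image plane.
    Returns None for points at or behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return (cx + focal * x / z, cy + focal * y / z)


def layout_words(text, points, base_size=24.0):
    """Assign words to points in order (cycling through the text) and return
    (word, screen_x, screen_y, font_size) tuples, farthest first, so nearer
    words are drawn on top. Font size shrinks with distance."""
    placed = []
    for word, point in zip(cycle(text.split()), points):
        screen = project(point)
        if screen is None:
            continue  # skip points the virtual camera cannot see
        depth = point[2]
        placed.append((depth, word, screen[0], screen[1], base_size / depth))
    placed.sort(key=lambda item: item[0], reverse=True)  # painter's order
    return [(word, sx, sy, size) for _, word, sx, sy, size in placed]
```

Cycling the text lets a short passage cover a dense scan, and drawing farthest-first is a simple painter's algorithm, so nearer words overlap farther ones.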

The scanner’s hardware consists of a webcam placed at the center of a motorized turntable, with a laser level attached. The turntable rotates the webcam and laser together through a full 360 degrees. Scans have to be performed in a dark room so the camera picks up the laser light clearly. Software built with Processing and OpenCV detects the laser light in each frame and calculates depth offsets from its position. The resulting point clouds are exported as the room "un-wrapped".
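Calculating depth from where the laser lands in each frame is a form of laser triangulation. The sketch below is in Python rather than the original Processing, and rests on several assumptions: the laser plane sits parallel to the camera's optical axis at a fixed sideways offset, frames arrive as rows of grayscale values from 0 to 255, and "un-wrapped" means a depth image with one column per turntable step. The calibration constants and every function name are hypothetical placeholders, not values or code from the original scanner.

```python
import math

# Hypothetical calibration values, not measurements from the original scanner.
FOCAL_PX = 600.0    # webcam focal length, in pixels
BASELINE_M = 0.10   # sideways distance between the laser plane and the camera, in metres
CENTER_X = 320.0    # principal point column (assumes a 640x480 frame)
CENTER_Y = 240.0    # principal point row
THRESHOLD = 200     # minimum brightness (0-255) to count as laser light


def detect_laser(frame):
    """For each row of a grayscale frame, return the column of the brightest
    pixel, or None when no pixel is bright enough. In a dark room the laser
    line should be the brightest thing in view."""
    hits = []
    for row in frame:
        col = max(range(len(row)), key=row.__getitem__)
        hits.append(col if row[col] >= THRESHOLD else None)
    return hits


def depth_from_offset(col):
    """Triangulate depth from the laser's horizontal offset, assuming the laser
    plane is parallel to the optical axis: offset = FOCAL_PX * BASELINE_M / z."""
    offset = col - CENTER_X
    if offset <= 0:
        return None  # no valid depth for this assumed geometry
    return FOCAL_PX * BASELINE_M / offset


def frame_to_points(frame, angle_rad):
    """Convert one frame into world-space (x, y, z) points by rotating the
    camera-space points about the vertical axis by the turntable angle."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    points = []
    for row_idx, col in enumerate(detect_laser(frame)):
        if col is None:
            continue
        z = depth_from_offset(col)
        if z is None:
            continue
        x_cam = BASELINE_M  # the lit point lies on the laser plane
        y_cam = (row_idx - CENTER_Y) * z / FOCAL_PX
        points.append((c * x_cam + s * z, y_cam, -s * x_cam + c * z))
    return points


def unwrap(frames):
    """Build an un-wrapped depth image: one row per image row, one column per
    turntable step, each cell holding a depth in metres or None."""
    columns = [[depth_from_offset(c) if c is not None else None
                for c in detect_laser(frame)] for frame in frames]
    return [list(r) for r in zip(*columns)]  # transpose: rows x angles
```

With these placeholder numbers, a laser line found 60 pixels right of center triangulates to 1 m (600 × 0.10 / 60). Taking the brightest pixel per row is the simplest possible detector; smoothing frames or fitting the peak to sub-pixel precision would likely give cleaner reconstructions.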